1import torch

2

3

4

5nh = 2

6

7nn = 50

8

9fh = torch.nn.Tanh()

10

11model = torch.nn.Sequential()

12model.add_module('layer_1', torch.nn.Linear(1,nn))

13model.add_module('fun_1', fh)

14for layer in range(2, nh+1):

15 model.add_module(f'layer_{layer}', torch.nn.Linear(nn,nn))

16 model.add_module(f'fun_{layer}', fh)

17model.add_module(f'layer_{nh+1}', torch.nn.Linear(nn,1))

18

19

20optim = torch.optim.Adam(model.parameters(),

21 lr = 1e-2)

22

23

24ns = 100

25nepochs = 10000

26nout_loss = 100

27tol = 1e-5

28

29for epoch in range(nepochs):

30

31

32 X = 2.*torch.rand((ns,1)) - 1.

33

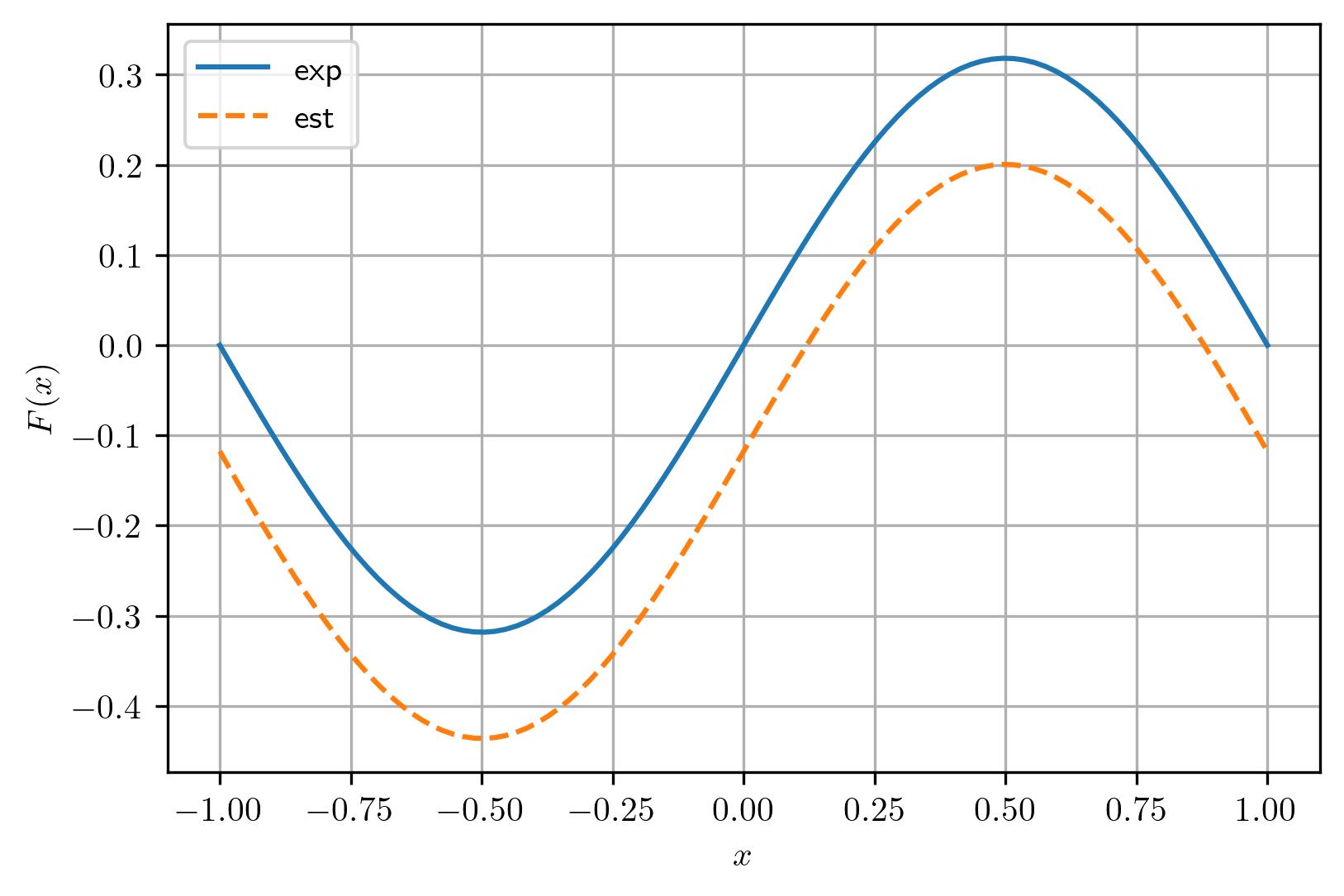

34 f_exp = torch.cos(torch.pi*X)

35

36

37 X.requires_grad = True

38 F = model(X)

39

40 f_est = torch.autograd.grad(

41 F, X,

42 grad_outputs=torch.ones_like(F),

43 retain_graph=True,

44 create_graph=True)[0]

45

46

47 loss = torch.mean((f_exp - f_est)**2)

48

49 if ((epoch % nout_loss) == 0):

50 print(f'epoch {epoch}: loss = {loss.item():.4e}')

51

52 if (loss.item() < tol):

53 print('onvergiu')

54 break

55

56 optim.zero_grad()

57 loss.backward()

58 optim.step()
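The loss above constrains only the derivative F'. The trained network therefore approximates an antiderivative of cos(πx), namely sin(πx)/π + C for an arbitrary constant C. The sketch below checks a trained model against this exact solution. It assumes that exact antiderivative as the reference. The helper name `antiderivative_errors` and the evaluation grid of 201 points in [-1, 1] are hypothetical choices and are not part of the original code.

```python
import math
import torch

def antiderivative_errors(model, n=201):
    """Hypothetical helper: compare a model F with the exact antiderivative.

    Returns (max |F'(x) - cos(pi x)|, max |F(x) - F(0) - sin(pi x)/pi|)
    over n equally spaced points in [-1, 1].
    """
    x = torch.linspace(-1.0, 1.0, n).reshape(-1, 1).requires_grad_(True)
    F = model(x)
    # dF/dx at every point; grad_outputs=ones is valid because F[i] depends only on x[i]
    dF = torch.autograd.grad(F, x, grad_outputs=torch.ones_like(F))[0]
    x, F, dF = x.detach(), F.detach(), dF.detach()
    deriv_err = (dF - torch.cos(math.pi * x)).abs().max().item()
    # The loss fixes F only up to an additive constant, so compare F(x) - F(0)
    with torch.no_grad():
        F0 = model(torch.zeros((1, 1)))
    value_err = (F - F0 - torch.sin(math.pi * x) / math.pi).abs().max().item()
    return deriv_err, value_err
```

After training, `print(antiderivative_errors(model))` would report both errors. Subtracting F(0) removes whatever constant offset the network happened to learn, because the loss is insensitive to that offset.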